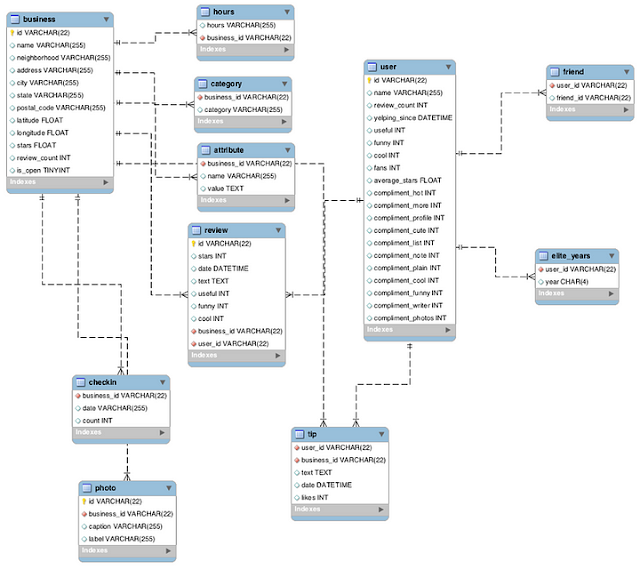

For this post, we will use the Yelp dataset and formulate ideas for analysis.

First, we need to explore the dataset. Below is the structure of the data in table form.

*The Yelp Dataset*

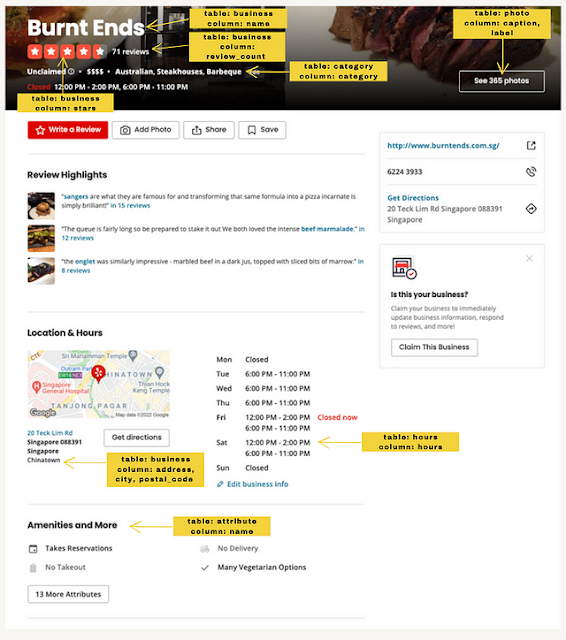

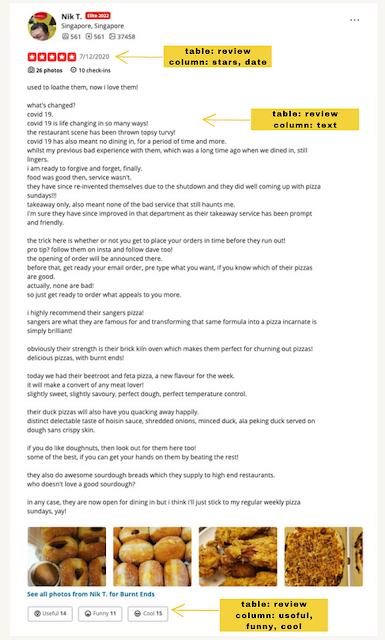

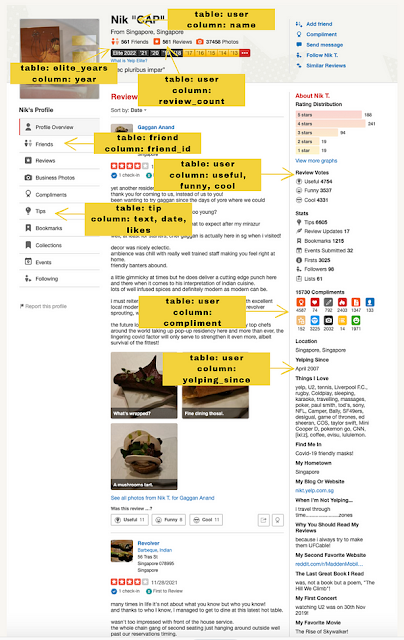
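If the dataset is available as a SQLite database file, the table and column structure shown above can also be listed programmatically, which is a useful first profiling step. The sketch below uses Python's built-in `sqlite3` module. The helper names `describe_database` and `row_counts` are hypothetical, and the two-table in-memory database only stands in for the real data.

```python
import sqlite3


def describe_database(conn: sqlite3.Connection) -> dict:
    """Return {table_name: [column names]} for every table in the database."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    schema = {}
    for table in tables:
        # PRAGMA table_info yields one row per column: (cid, name, type, notnull, dflt_value, pk)
        schema[table] = [col[1] for col in conn.execute(f'PRAGMA table_info("{table}")')]
    return schema


def row_counts(conn: sqlite3.Connection) -> dict:
    """Count the rows in each table as a quick first profiling step."""
    return {
        table: conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()[0]
        for table in describe_database(conn)
    }


if __name__ == "__main__":
    # Hypothetical stand-in for the real dataset: two tables in an in-memory database
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE business (id TEXT, name TEXT, stars REAL, review_count INTEGER, is_open INTEGER)"
    )
    conn.execute("CREATE TABLE checkin (business_id TEXT, weekday TEXT, hour TEXT, count INTEGER)")
    conn.execute("INSERT INTO business VALUES ('b1', 'Cafe A', 4.5, 120, 1)")
    for table, columns in describe_database(conn).items():
        print(table, columns)
    print(row_counts(conn))
```

Against the real data, you would pass the database file's path to `sqlite3.connect(...)` instead of `":memory:"`.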

To understand the data above and everything it contains, I visited the Yelp website and matched the table names and column names against its pages.

*The Yelp Dataset - Business*

*The Yelp Dataset - Review*

*The Yelp Dataset - User Page*

Armed with an understanding of the data, a profile of its contents, and the relationships among the tables, you can now look into questions that could be useful to different stakeholders.

Some of the business questions that came to mind upon seeing the data above were:
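One way to confirm a relationship between two tables before joining them is to count the rows that have no match on the other side. The sketch below assumes tables are linked through an `id` column on the parent table and a key column such as `business_id` on the child table, as in the `checkin` join used in this post. The `orphan_count` helper and the sample rows are hypothetical.

```python
import sqlite3


def orphan_count(conn, child_table, child_key, parent_table, parent_key="id"):
    """Count rows in child_table whose child_key has no matching parent_table.parent_key.

    Rows with a NULL child_key are counted as orphans too, since they cannot match.
    Table and column names are interpolated directly, so only pass trusted identifiers.
    """
    query = f"""
        SELECT COUNT(*)
        FROM {child_table} AS c
        LEFT JOIN {parent_table} AS p ON c.{child_key} = p.{parent_key}
        WHERE p.{parent_key} IS NULL
    """
    return conn.execute(query).fetchone()[0]


if __name__ == "__main__":
    # Hypothetical sample rows; column names mirror the business and review tables
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE business (id TEXT, name TEXT);
        CREATE TABLE review (id TEXT, business_id TEXT, stars INTEGER);
        INSERT INTO business VALUES ('b1', 'Cafe A'), ('b2', 'Diner B');
        INSERT INTO review VALUES ('r1', 'b1', 5), ('r2', 'b1', 3), ('r3', 'b9', 1);
    """)
    # Reviews that point at a business that does not exist
    print(orphan_count(conn, "review", "business_id", "business"))  # 1
    # Businesses with no reviews at all (parent and child roles swapped)
    print(orphan_count(conn, "business", "id", "review", "business_id"))  # 1
```

A count of 0 in both directions suggests the key columns line up cleanly; a non-zero count tells you how many rows an INNER JOIN will silently drop.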

- Which cities would be the best choice for setting up a business?

- Do reviews from users with Elite years carry more weight?

- Which words appear most often in review text, for use in sentiment analysis?

- What effect do review_count and check-ins have on the life of a business?

I focused on the last question because of the limitations of SQLite.

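```sql
-- Total check-ins per business, with its star rating, review count and open status
SELECT business.name
     , business.stars
     , business.review_count
     , business.is_open
     , SUM(checkin.count) AS total_checkins
FROM business
INNER JOIN checkin ON business.id = checkin.business_id
-- Group by the unique id, not the name, so chains sharing a name are not merged
GROUP BY business.id
ORDER BY total_checkins DESC;
```

Two tables were needed to answer this question. I used an INNER JOIN so that only businesses with check-in records are returned; with a LEFT JOIN, businesses without check-ins would show a NULL ('None') total.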
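To see what the check-in query does on a small scale, the sketch below runs it against a hypothetical in-memory database through Python's `sqlite3` module. The rows are invented, the `weekday` and `hour` columns in `checkin` are assumptions, and the query groups by `business.id` rather than `business.name` so that two locations of a chain sharing a name are reported separately.

```python
import sqlite3

# Check-in query, grouped by business.id so same-named chains stay separate
CHECKIN_QUERY = """
SELECT business.name
     , business.stars
     , business.review_count
     , business.is_open
     , SUM(checkin.count) AS total_checkins
FROM business
INNER JOIN checkin ON business.id = checkin.business_id
GROUP BY business.id
ORDER BY total_checkins DESC
"""


def build_sample_db():
    """Create a tiny in-memory database with hypothetical rows (not real Yelp data)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE business (id TEXT PRIMARY KEY, name TEXT, stars REAL,
                               review_count INTEGER, is_open INTEGER);
        CREATE TABLE checkin (business_id TEXT, weekday TEXT, hour TEXT, count INTEGER);
    """)
    conn.executemany("INSERT INTO business VALUES (?, ?, ?, ?, ?)", [
        ("b1", "Coffee Co", 3.0, 40, 1),
        ("b2", "Coffee Co", 4.5, 95, 1),  # same name as b1, different location
        ("b3", "Diner", 1.0, 12, 1),
        ("b4", "Bakery", 5.0, 8, 0),      # no check-ins: dropped by the INNER JOIN
    ])
    conn.executemany("INSERT INTO checkin VALUES (?, ?, ?, ?)", [
        ("b1", "Mon", "9:00", 10),
        ("b1", "Tue", "9:00", 5),
        ("b2", "Sat", "10:00", 30),
        ("b3", "Fri", "19:00", 2),
    ])
    return conn


if __name__ == "__main__":
    conn = build_sample_db()
    for row in conn.execute(CHECKIN_QUERY):
        print(row)
    # ('Coffee Co', 4.5, 95, 1, 30)
    # ('Coffee Co', 3.0, 40, 1, 15)
    # ('Diner', 1.0, 12, 1, 2)
```

Grouping by `business.name` instead would merge `b1` and `b2` into a single "Coffee Co" row with 45 check-ins, and SQLite would take `stars` and `review_count` from an arbitrary one of the two rows. "Bakery" never appears because it has no matching `checkin` rows.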

The selected data below shows that a business with only 1 star can still be open for business, and that the businesses with the highest check-in counts do not necessarily have 5 stars. This suggests that there is no direct correlation among stars, review_count, and check-ins.
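The conclusion above comes from reading the rows; a correlation coefficient would put a number on it. The sketch below computes Pearson's r for each pair of stars, review_count, and total check-ins, assuming rows shaped like the query output without the name and is_open columns. The function names and sample rows are hypothetical. Pearson's r only measures linear association, so a value near 0 suggests little linear relationship rather than none at all; counts like these are often skewed, in which case a rank-based measure such as Spearman's may be a better fit.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient r between two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(rows, names=("stars", "review_count", "checkins")):
    """Return {(name_a, name_b): r} for every pair of columns in rows."""
    columns = list(zip(*rows))  # transpose rows into one tuple per column
    return {
        (names[i], names[j]): pearson(columns[i], columns[j])
        for i in range(len(names))
        for j in range(i + 1, len(names))
    }


if __name__ == "__main__":
    # Hypothetical (stars, review_count, total_checkins) rows, not real Yelp results
    sample = [(4.5, 95, 30), (3.0, 40, 15), (1.0, 12, 2), (4.0, 60, 22), (2.5, 30, 9)]
    for pair, r in correlation_matrix(sample).items():
        print(pair, round(r, 3))
```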

It would be useful for Yelp to show the current population of the neighbourhood where each business is located.

*The Yelp Dataset - Correlation of Review Count, Check-in, and Stars*